Monitoring systems with an SRE mindset

We have all changed: from monoliths to microservices, from waterfall to Agile, from “developers vs operators” to DevOps. We accept that our systems are becoming more complex and that people on the team need more skills in order to move faster. But how fast can we go?

Monitoring? Sure!

Monitoring is an undeniable requirement for every system. It is an old topic and we all agree that it is needed, but when everything else has changed, should monitoring change too?

At its core, monitoring is a data-driven model for operators. We collect information from every distributed node, push it into a central store, build dashboards, and set up alerts on metrics. This can be done in style with a combination of Prometheus and Grafana.

This is really cool, for sure. I built many dashboards for my team, from node information such as RAM, disk usage, and CPU to service information for ElasticSearch, ActiveMQ, MongoDB… I also built something like an Apdex score for our API endpoints, along with error rate, traffic, latency… It looks fully featured for operations: we have dashboards for everything. But that is exactly our problem. We have to watch dashboards about everything.

Yes, it hurts my eyes a lot. So I decided to build some overview dashboards that gather only the important metrics and cut the noise. That sounds great, and I thought it should work. However, which metrics are important? Which metrics help us make decisions? For example, disk usage is a key metric, and we can all agree on that. But when one of my nodes runs out of disk space, is it a serious problem? Should I wake our operators up at night to resolve it? Sometimes a node with a full disk goes down and takes many services down with it. Services going down sounds bad, but is it really bad? If our load balancer works well and no user realizes that the services went down, then a full disk is not as bad as we first thought.

So, the question is: What metrics are REALLY important?

What is SRE?

While looking for the answer to that question, we met SRE.

SRE stands for Site Reliability Engineering - a set of practices and experience shared by Google for building, deploying, monitoring, and maintaining systems. According to Google, DevOps is an interface: it defines a mindset but provides no implementation. That is why they define SRE as an implementation of DevOps:

    class SRE implements DevOps

There are three terms to remember: SLO, SLI, and error budget. It is a long story that I don’t want to retell here; read the book if you want. The online version is free at https://landing.google.com/sre/book.html

Monitoring systems with an SRE mindset

The book contains one interesting idea that can answer our question about monitoring: the SLO. So what is an SLO? SLO stands for Service Level Objective, so it is an objective for our service. These should be objectives that both the technical team and the product team define and agree on, and that really matter for our product.

It is easy to see that a full disk is not a clear product objective. Our manager has no idea about it; even though it can sometimes cause a disaster, its impact is hard to see. So we sat down and worked out what our objectives are and what really affects our product. For example, one objective every team should commit to is uptime: 99.99% uptime for the service.

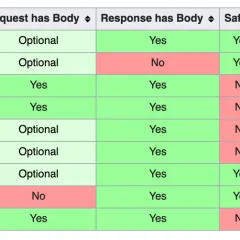

After setting the objective at 99.99% uptime, we need to define what uptime means. Technically, we send an HTTP request to our website: a 200 response counts as uptime, and anything else counts as downtime. This definition is called an SLI (Service Level Indicator).
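
As a rough illustration, the check described here could look like the Python sketch below. The function names, the one-second timeout, and the `fetch` hook are assumptions made for this example, not part of any particular monitoring tool.

```python
import urllib.error
import urllib.request


def is_up(status_code):
    """The SLI from the text: a 200 response is uptime, anything else is downtime."""
    return status_code == 200


def probe(url, timeout=1.0, fetch=None):
    """Send one HTTP request and return True (up) or False (down).

    `fetch` is a hypothetical hook that returns a status code for a URL;
    it exists only so the probe can be exercised without a network.
    """
    if fetch is None:
        def fetch(target):
            # urlopen follows redirects, so the final response is what counts.
            with urllib.request.urlopen(target, timeout=timeout) as response:
                return response.status
    try:
        return is_up(fetch(url))
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses: downtime.
        return is_up(err.code)
    except (urllib.error.URLError, OSError):
        # DNS failures, refused connections, and timeouts are downtime too.
        return False
```

Treating timeouts and connection errors as downtime is a design choice here: from the user's point of view, a site that does not answer is down.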

Repeat this check every second and we get a list of results from which we can calculate our uptime rate; this is our current service level. Compare it with the objective we set at the beginning: the closer the better, and anything higher exceeds expectations.
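
A minimal sketch of that calculation, assuming the check results are kept as a list of booleans (`True` for up, `False` for down). The names `availability` and `meets_objective` are made up for this example.

```python
def availability(results):
    """Fraction of successful checks, e.g. 0.9999 for 99.99% uptime."""
    if not results:
        raise ValueError("no check results yet")
    return sum(1 for ok in results if ok) / len(results)


def meets_objective(results, objective=0.9999):
    """Compare the measured uptime rate with the agreed objective."""
    return availability(results) >= objective


# One failed check out of 10,000 is exactly 99.99% uptime.
checks = [True] * 9999 + [False]
print(availability(checks), meets_objective(checks))
```

With one failure in 10,000 checks the objective is just met; a second failure drops the rate to 99.98% and the objective is missed.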

However, we still need alerts for when this SLO is about to be crossed. Let's meet the error budget. If our SLO is 99.99%, our error budget is 0.01%. Concretely, the unavailable time we are allowed is:

    Daily: 8.6s
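
The daily figure is simply 0.01% of the 86,400 seconds in a day. The sketch below repeats that arithmetic for a few windows; the weekly and 30-day windows are added here for illustration, and `error_budget_seconds` is a made-up helper name.

```python
DAY_SECONDS = 24 * 60 * 60


def error_budget_seconds(slo, window_seconds):
    """Allowed downtime in seconds for an SLO given as a fraction (0.9999 = 99.99%)."""
    return (1.0 - slo) * window_seconds


for label, window in (
    ("Daily", DAY_SECONDS),
    ("Weekly", 7 * DAY_SECONDS),
    ("30 days", 30 * DAY_SECONDS),
):
    print(f"{label}: {error_budget_seconds(0.9999, window):.1f}s")
```

For a 99.99% SLO this gives about 8.6 s per day, 60.5 s per week, and 259.2 s per 30 days.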

Now we can define the condition for firing an alert based on how fast we are burning the budget. If we have 4 s of continuous downtime, that is roughly 50% of today's error budget, so we should fire an alert. It also follows that a tough SLO needs a correspondingly fine-grained SLI: in this case, the SLI should send an HTTP request every second.
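
One way to express this alert condition is sketched below, assuming one check per second and a list of boolean results for the current day. Four seconds is about 46% of the 8.64 s daily budget, so the sketch uses a threshold of 0.45 to match the example in the text. That threshold, like the function names, is an assumption to tune for your own service.

```python
def budget_consumed(results, slo=0.9999, window_seconds=86400, interval_seconds=1.0):
    """Fraction of the window's error budget used up by failed checks."""
    budget = (1.0 - slo) * window_seconds
    downtime = sum(1 for ok in results if not ok) * interval_seconds
    return downtime / budget


def should_alert(results, threshold=0.45, **kwargs):
    """Fire once the consumed share of the budget reaches the threshold.

    The default threshold is a hypothetical value chosen so that 4 s of
    downtime against a 99.99% daily SLO triggers an alert.
    """
    return budget_consumed(results, **kwargs) >= threshold


# 100 good checks followed by 4 s of downtime today.
today = [True] * 100 + [False] * 4
print(should_alert(today))
```

With these defaults, 4 s of downtime triggers the alert and 3 s does not. This version counts all downtime in the window rather than only continuous downtime, which is slightly stricter than the condition in the text.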

Summary:

To sum up, to monitor a system the SRE way, we should:

- Define an expected objective.

- Create an SLI to collect data and measure the current service level against the SLO.

- Calculate the error budget from the SLO.

- Fire an alert when the error budget is burning too fast.

How fast can we go? Look at our error budget.

Along with dashboards that reveal system status, we now have a recipe for creating alerts that really matter.

P/S: In SRE, Google separates alerts into several levels, such as tickets and on-call pages, but I think alerting means on-call; anything else should just be called a warning.